Author: Bekah Funning - Page 3

Talent Strategy for Generative AI: How to Hire, Upskill, and Build AI Communities That Work

Learn how to build a real generative AI talent strategy in 2025: hire for hybrid skills, upskill effectively with hands-on learning, and create communities where AI knowledge actually sticks.

IDE vs No-Code: Choosing the Right Development Tool for Your Skill Level

Learn how to choose between IDEs and no-code platforms based on your skill level, project needs, and workflow. Discover when to use Bubble, VS Code, Mendix, or AI tools in 2025.

Refusal-Proofing Security Requirements: Prompts That Demand Safe Defaults

Refusal-proof security requirements eliminate insecure defaults by making safety mandatory, measurable, and automated. Learn how to write prompts that force secure configurations and stop vulnerabilities before they start.

Governance Committees for Generative AI: Roles, RACI, and Cadence

Learn how to build a generative AI governance committee with clear roles, RACI structure, and meeting cadence. Real-world examples from IBM, JPMorgan, and The ODP Corporation show what works and what doesn't.

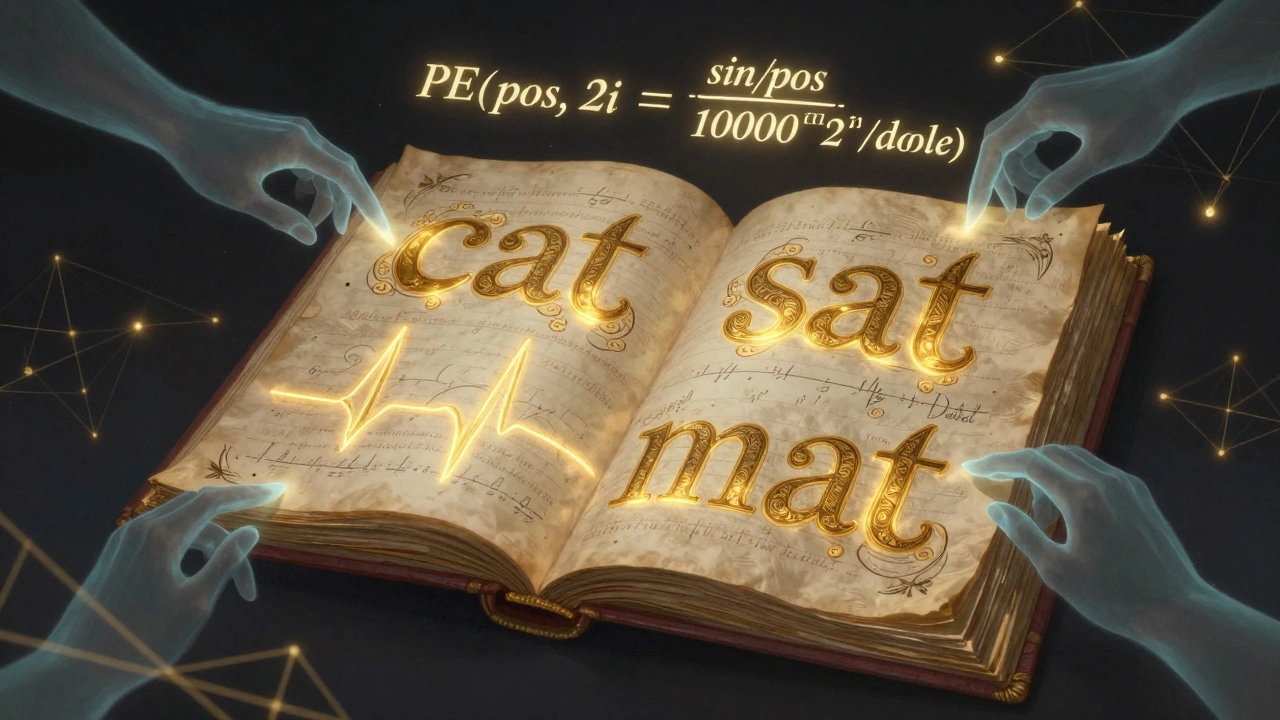

Positional Encoding in Transformers: Sinusoidal vs Learned for Large Language Models

Sinusoidal and learned positional encodings were the original ways transformers handled word order. Most modern LLMs have since moved to rotary position embeddings (RoPE) or ALiBi, which deliver far better long-context performance. Here's what you need to know.
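To make the contrast concrete, here is a minimal Python sketch, not taken from the article. It builds the classic sinusoidal table from the original Transformer paper, which is added to token embeddings, and compares it with ALiBi's linear distance penalty, which is added to attention scores instead. The function names `sinusoidal_encoding`, `alibi_slopes`, and `alibi_bias` are hypothetical, and the slope formula assumes the number of heads is a power of two (the ALiBi paper's base case).

```python
import math


def sinusoidal_encoding(num_positions: int, d_model: int) -> list[list[float]]:
    """Fixed sinusoidal table: even dimensions use sin, odd dimensions use cos.

    PE[pos][2k]   = sin(pos / 10000**(2k / d_model))
    PE[pos][2k+1] = cos(pos / 10000**(2k / d_model))
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even")
    table = []
    for pos in range(num_positions):
        row = []
        for two_k in range(0, d_model, 2):
            # Each sin/cos pair shares one frequency; frequencies shrink geometrically.
            angle = pos / (10000 ** (two_k / d_model))
            row.extend([math.sin(angle), math.cos(angle)])
        table.append(row)
    return table


def alibi_slopes(num_heads: int) -> list[float]:
    """Per-head slopes 2**(-8h/n) for h = 1..n (assumes n is a power of two)."""
    return [2 ** (-8 * h / num_heads) for h in range(1, num_heads + 1)]


def alibi_bias(seq_len: int, slope: float) -> list[list[float]]:
    """Causal bias added to attention scores: -slope * distance to each past key."""
    neg_inf = float("-inf")
    return [
        [-slope * (i - j) if j <= i else neg_inf  # future keys are masked out
         for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    pe = sinusoidal_encoding(num_positions=4, d_model=8)
    print([round(x, 3) for x in pe[1]])
    print(alibi_slopes(8))
    print(alibi_bias(3, slope=0.5))
```

Because the ALiBi penalty depends only on the distance `i - j` and has no learned per-position parameters, the same pattern can be applied to sequences longer than those seen in training, which is the usual argument for its long-context behavior.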

Benchmarking Vibe Coding Tool Output Quality Across Frameworks

Vibe coding tools are transforming how code is written, but not all AI-generated code is reliable. This article breaks down the latest benchmarks, top-performing models like GPT-5.2, security risks, and what it really takes to use them effectively in 2025.

About

Tri-City AI Links connects AI innovation across Hillsboro, Beaverton, and Portland with curated news, events, startups, jobs, and resources. Discover regional collaboration in artificial intelligence.

Terms of Service

Terms of Service for Tri-City AI Links, an informational blog connecting AI ecosystems in three Oregon cities. Covers content use, copyright, disclaimers, and liability under U.S. law.

Privacy Policy

Tri-City AI Links collects no personal data beyond standard analytics and cookies. Learn how your browsing information is used and what rights you have under the CCPA on this AI ecosystem blog.

CCPA

Learn about your CCPA/CPRA rights regarding personal data collected by Tri-City AI Links. Exercise your right to know, delete, or opt out of sharing of your information.

Contact

Contact Tri-City AI Links to connect with the AI ecosystems of Hillsboro, Portland, and Eugene. Share resources, ask questions, or explore collaborations in regional AI innovation.

Model Distillation for Generative AI: Smaller Models with Big Capabilities

Model distillation lets you shrink large AI models into smaller, faster versions that retain 90% or more of the original model's performance. Learn how it works, where it shines, and why it's becoming the standard for enterprise AI.
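One common formulation of distillation, the soft-target loss from Hinton et al. (2015), trains a small "student" to match a large "teacher's" temperature-softened output distribution while still fitting the true labels. The pure-Python sketch below illustrates that formulation under stated assumptions; it is not the article's recipe. The function names and the `temperature=2.0` and `alpha=0.5` defaults are hypothetical and would normally be tuned.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      true_label: int, temperature: float = 2.0,
                      alpha: float = 0.5) -> float:
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, label)."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # KL divergence between the softened distributions (zero-probability terms contribute 0).
    kl = sum(pt * (math.log(pt) - math.log(ps))
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # T^2 scaling keeps soft-target gradients comparable in size to the hard-label term.
    soft_loss = (temperature ** 2) * kl
    # Ordinary cross-entropy against the true label at temperature 1.
    hard_loss = -math.log(softmax(student_logits)[true_label])
    return alpha * soft_loss + (1 - alpha) * hard_loss


if __name__ == "__main__":
    teacher = [5.0, 1.0, 0.5]
    print(distillation_loss([0.0, 0.0, 0.0], teacher, true_label=0))  # student far from teacher
    print(distillation_loss([4.5, 1.2, 0.4], teacher, true_label=0))  # student close to teacher
```

A higher temperature flattens the teacher's distribution so the student also learns how the teacher ranks the wrong answers, not just which answer is correct.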