Tri-City AI Links

Incident Response Playbooks for LLM Security Breaches: What Works and What Doesn’t

LLM security breaches require specialized response plans. Learn how incident response playbooks for prompt injection, data leakage, and safety breaches work, and why traditional cybersecurity tools fail to stop them.

Multimodal Vibe Coding: Turn Sketches Into Working Code Fast

Multimodal vibe coding lets you turn sketches and voice commands into working code in minutes. Learn how AI tools like GitHub Copilot Vision are changing software development, and why some teams love it while others avoid it.

How Large Language Models Learn: Self-Supervised Training at Internet Scale

Large language models learn by predicting the next word in massive amounts of internet text. This self-supervised approach, powered by Transformer architectures, enables unprecedented scale and versatility, but it comes with costs, biases, and limitations that shape how these models are used today.
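
To make the self-supervised idea concrete, here is a minimal Python sketch (not taken from the article) of how raw text supplies its own labels: every position becomes a training example whose target is simply the next token. It assumes a whitespace split in place of a real subword tokenizer, and `next_token_pairs` and `context_size` are hypothetical names chosen for illustration.

```python
from typing import List, Tuple

def next_token_pairs(tokens: List[str], context_size: int) -> List[Tuple[List[str], str]]:
    """Turn one token sequence into (context, next_token) training pairs.

    The targets come from the text itself, so no human labeling is needed;
    this is what makes next-token prediction self-supervised.
    """
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]  # at most context_size previous tokens
        pairs.append((context, tokens[i]))
    return pairs

# Whitespace splitting stands in for a real subword tokenizer (assumption).
tokens = "the cat sat on the mat".split()
pairs = next_token_pairs(tokens, context_size=3)
for context, target in pairs:
    print(context, "->", target)
```

A real model is trained to assign high probability to each target given its context, typically by minimizing cross-entropy over an enormous number of such pairs.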

Funding Models for Vibe Coding Programs: Chargebacks and Budgets

Vibe coding slashes development time but creates unpredictable costs. Learn how chargebacks happen, why flat-rate plans fail, and how to build real budgets for AI-driven development.

AI Pair PM: How AI Agents Are Automating Product Requirements from Draft to Final

AI Pair PM uses autonomous agents to generate and refine product requirements, cutting PRD creation time from days to hours while improving accuracy and alignment across teams. This is the future of product management.

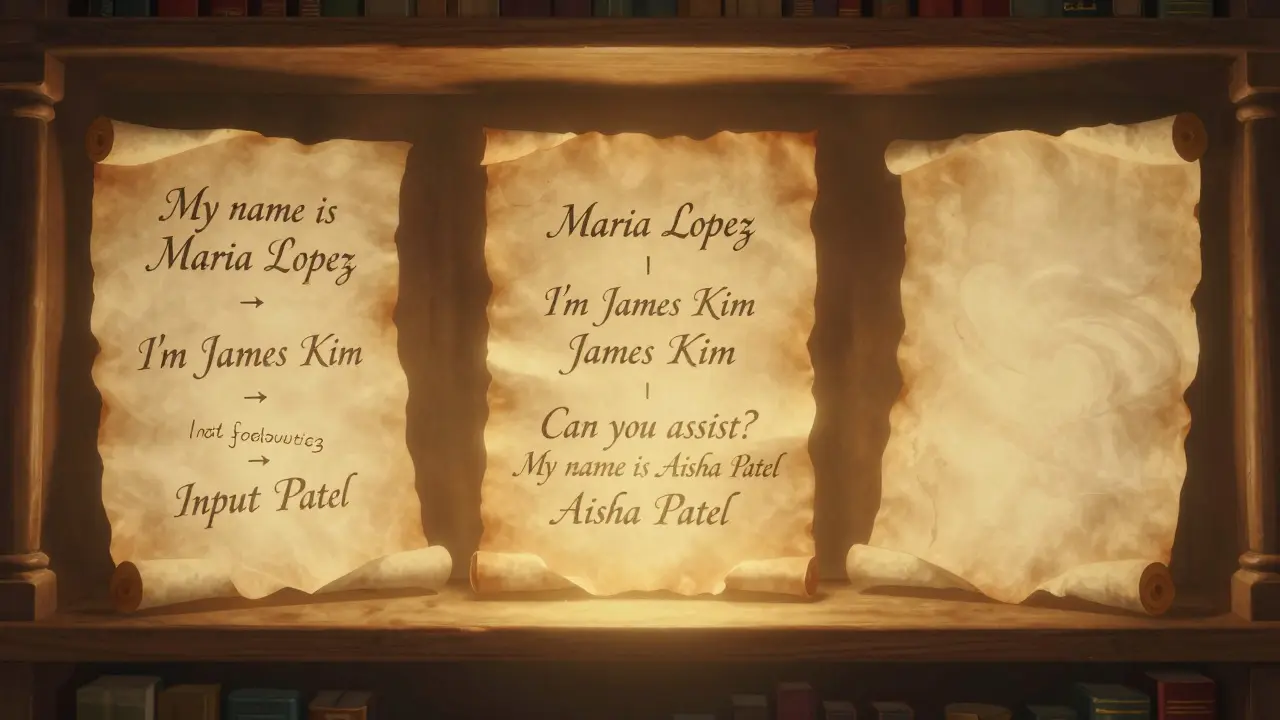

Few-Shot Prompting Strategies That Boost LLM Accuracy and Consistency

Few-shot prompting boosts LLM accuracy by 15-40% using just 2-8 examples. Learn how to choose the right examples, avoid over-prompting, and combine few-shot prompting with chain-of-thought for better results, all without fine-tuning.
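
A few-shot prompt is just a handful of labeled examples placed ahead of the real query. The sketch below assumes a simple "Input/Output" layout and a sentiment task. `build_few_shot_prompt` is a hypothetical helper, and its 2-8 example check only mirrors the range in the summary rather than a hard rule.

```python
from typing import List, Tuple

def build_few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Place labeled examples ahead of the real query so the model can infer the pattern."""
    # The 2-8 bound mirrors the range in the article summary; it is a guideline, not a rule.
    if not 2 <= len(examples) <= 8:
        raise ValueError("expected between 2 and 8 examples")
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Input: {text}", f"Output: {label}", ""]
    lines += [f"Input: {query}", "Output:"]  # the model completes this final line
    return "\n".join(lines)

examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("It arrived on the expected date.", "neutral"),
]
prompt = build_few_shot_prompt("Classify the sentiment of each review.", examples, "Setup took forever.")
print(prompt)
```

Keeping the examples in one consistent format matters as much as choosing them well, because the model copies the layout it sees.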

Speculative Decoding for Large Language Models: How Draft and Verifier Models Speed Up AI Responses

Speculative decoding speeds up large language models by using a fast draft model to predict tokens ahead, then verifying them with the main model. It cuts response times by up to 5x without losing quality.
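
The loop below is a simplified sketch of the draft-then-verify idea. It uses toy "models" that are plain Python functions and assumes greedy decoding, so a draft token is kept only if it matches the target model's own top choice. Production systems score all draft tokens in one batched forward pass and use a rejection-sampling rule when sampling. Every name here (`speculative_decode`, `toy_target`, `toy_draft`, `k`) is hypothetical.

```python
from typing import Callable, List

# A "model" here is any function mapping a token sequence to its greedy next token.
Model = Callable[[List[str]], str]

def speculative_decode(target: Model, draft: Model, prompt: List[str],
                       max_new: int, k: int = 4) -> List[str]:
    """Greedy speculative decoding: the draft proposes k tokens, the target verifies them."""
    seq = list(prompt)
    while len(seq) - len(prompt) < max_new:
        # 1. The cheap draft model proposes k tokens, one after another.
        proposal: List[str] = []
        for _ in range(k):
            proposal.append(draft(seq + proposal))
        # 2. The target checks each proposed position. Real systems score all k
        #    positions in one batched forward pass; this loop is only for clarity.
        accepted: List[str] = []
        for tok in proposal:
            expected = target(seq + accepted)
            accepted.append(expected)  # always keep the target's own choice
            if tok != expected:
                break  # first mismatch: discard the rest of the draft
        else:
            # Every draft token matched, so the target also contributes its next token.
            accepted.append(target(seq + accepted))
        seq.extend(accepted)
    return seq[:len(prompt) + max_new]

def greedy_decode(target: Model, prompt: List[str], max_new: int) -> List[str]:
    """Baseline: one target call per generated token."""
    seq = list(prompt)
    for _ in range(max_new):
        seq.append(target(seq))
    return seq

# Toy stand-ins (hypothetical): the "large" model recites a fixed sentence,
# and the "small" draft model gets every third position wrong.
SENTENCE = "speculative decoding lets a small model guess and a large model check".split()

def toy_target(seq: List[str]) -> str:
    return SENTENCE[len(seq)] if len(seq) < len(SENTENCE) else "<eos>"

def toy_draft(seq: List[str]) -> str:
    return toy_target(seq) if len(seq) % 3 else "???"

result = speculative_decode(toy_target, toy_draft, ["speculative"], max_new=8)
print(" ".join(result))
```

Every kept token equals what the target model would have picked, so the output matches plain greedy decoding. The speedup comes from confirming several draft tokens per expensive target step.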

Communicating Governance Without Killing Velocity: Dos and Don'ts in Software Development

Learn how to communicate governance in software teams without slowing down velocity. Discover practical dos and don'ts from top tech companies that balance compliance with developer autonomy.

Logit Bias and Token Banning in LLMs: How to Control Outputs Without Retraining

Logit bias and token banning let you steer LLM outputs without retraining. Learn how to block unwanted words, avoid model workarounds, and apply this technique safely in real-world AI systems.
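
Logit bias is an additive offset applied to the model's raw scores (logits) before they are turned into probabilities. Banning a token amounts to pushing its score to negative infinity, which gives it zero probability. The sketch below shows that arithmetic on a hypothetical three-token vocabulary. The helper names are illustrative and do not reflect any provider's API.

```python
import math
from typing import Dict

def apply_logit_bias(logits: Dict[str, float], bias: Dict[str, float]) -> Dict[str, float]:
    """Add a per-token bias to raw logits; a bias of -inf bans the token outright."""
    return {tok: score + bias.get(tok, 0.0) for tok, score in logits.items()}

def softmax(logits: Dict[str, float]) -> Dict[str, float]:
    """Convert logits to probabilities; banned (-inf) tokens get probability 0."""
    m = max(v for v in logits.values() if v != -math.inf)  # raises if every token is banned
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}  # exp(-inf) == 0.0
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

logits = {"yes": 2.0, "no": 1.0, "maybe": 0.5}
bias = {"yes": -math.inf, "maybe": 1.0}  # ban "yes", nudge "maybe" upward
probs = softmax(apply_logit_bias(logits, bias))
print(probs)
```

A ban applies to token IDs, not words. The same word can often be produced from different tokens, for example with a leading space or different capitalization, which is one way a model can work around a ban.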

Liability Considerations for Generative AI: Vendor, User, and Platform Responsibilities

In 2026, generative AI liability is no longer theoretical. Vendors, users, and platforms all share legal responsibility when AI causes harm. New laws in California and New York are enforcing transparency, disclosure, and accountability across the AI supply chain.

Why Functional Vibe-Coded Apps Can Still Hide Critical Security Flaws

Vibe-coded apps built with AI assistants may work perfectly but often hide critical security flaws like hardcoded secrets, client-side auth bypasses, and exposed internal tools. These flaws evade standard testing and are growing rapidly; here’s how to spot and fix them.
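
One crude way to spot hardcoded secrets is a line-by-line regex scan. The two rules below are assumptions chosen for this example. The `AKIA` prefix is the familiar format of long-term AWS access key IDs, and the second rule catches quoted values assigned to names like `API_KEY`. Dedicated secret scanners use much larger rule sets and entropy checks, so treat this as a sketch rather than a defense.

```python
import re
from typing import List, Tuple

# Illustrative rules only (assumption); real scanners ship far larger rule sets.
SECRET_PATTERNS = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # A quoted value of 8+ characters assigned to a secret-sounding name.
    "generic_assignment": re.compile(
        r"""(?i)\b(api[_-]?key|secret|token|password)\b\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}

def find_hardcoded_secrets(source: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits

sample = '''const region = "us-east-1";
const API_KEY = "not-a-real-key-123456";
const retries = 3;
const awsKey = "AKIAABCDEFGHIJKLMNOP";
'''
for lineno, rule in find_hardcoded_secrets(sample):
    print(f"line {lineno}: possible secret ({rule})")
```

Once a secret is flagged, the usual fix is to move it into server-side configuration, such as environment variables or a secrets manager, and to rotate the exposed key.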

Evaluating New Vibe Coding Tools: A Buyer's Checklist for 2025

There is no distinct category of 'new vibe coding tools' in 2025. Here’s what actually matters when choosing an AI coding assistant: real features, pricing, offline support, and how well it fits your stack. Skip the hype and test the tools that work.