AI is writing your code now. But is it writing it right?

You open your IDE. You type a comment: "Create a function that fetches user data from the API and formats it as JSON". A second later, the IDE fills in 15 lines of clean, working Python. No typing. No searching Stack Overflow. No debugging syntax errors. That’s the promise of AI code generators like GitHub Copilot, Amazon CodeWhisperer, and CodeLlama. And for many developers, it’s already saving hours every week.

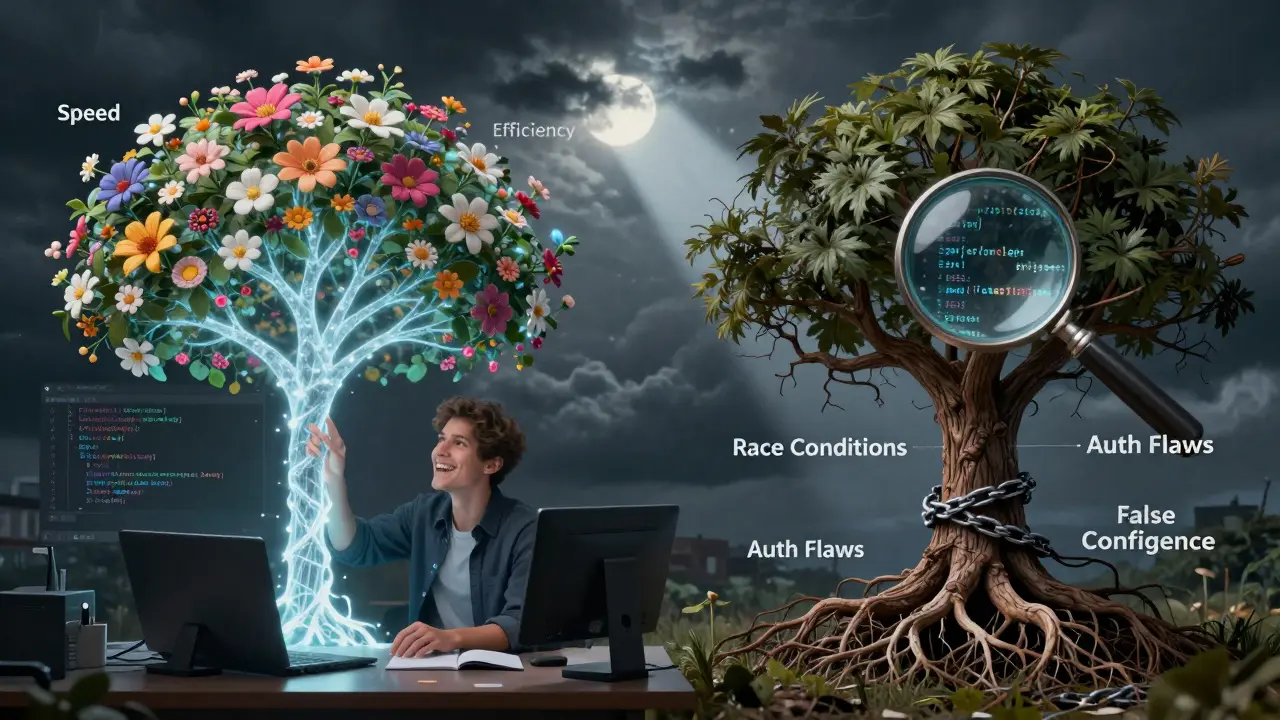

But here’s the catch: every time that AI writes code for you, it’s also hiding a risk. That function might work perfectly in tests but fail silently when a user sends malformed input. It might use a deprecated library. Or worse, it might open a security hole you didn’t even notice. The truth isn’t that AI code tools are magic. They’re powerful junior developers who never sleep, never get tired… and never question their own mistakes.

Let’s cut through the hype. What do these tools actually do well? Where do they fall apart? And how can you use them without turning your codebase into a minefield?

What’s actually getting faster?

GitHub’s internal data from 2022 showed Copilot users completed tasks 55% faster. That number sticks because it’s real, at least for the right kinds of work. If you’re building a CRUD interface, wiring up an API endpoint, or writing unit tests for a simple function, AI tools are unbeatable. They’ve seen millions of examples of exactly this stuff. They don’t need to remember how to structure a Django model or what headers to send in a POST request. They just generate it.

Real developers report the same thing. On Reddit, u/code_warrior99 said Copilot saves him 2-3 hours a day on boilerplate. That’s not fluff. That’s real time back: time you used to spend copying old code, fixing indentation, or hunting down the right syntax for a list comprehension in Python. The same pattern shows up in G2 reviews: 68% of users say AI tools reduce context switching. You stop jumping between your code, docs, and search tabs. The AI fills the gaps.

But here’s what nobody talks about: the only tasks AI improves are the ones you already know how to do. If you’re stuck on a logic problem, AI won’t help you think. It’ll just give you something that looks right. And that’s dangerous.

The hidden cost: debugging what you didn’t write

Here’s a common story: You ask the AI to generate a function that validates user passwords. It returns code that looks perfect. It passes your unit tests. You merge it. A week later, your security team flags it: the function accepts blank passwords made up entirely of spaces because it only checks length, not content. The AI didn’t understand the requirement. It just guessed based on patterns it saw in training data.
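To make that failure mode concrete, here is a hypothetical sketch of the kind of length-only check the story describes, next to a slightly stricter version. The function names and the rules in the stricter check (an 8-character minimum, at least one letter and one digit) are assumptions for illustration, not a recommended password policy.

```python
def naive_is_valid_password(password: str) -> bool:
    # Length-only check: looks reasonable and passes a happy-path test.
    return len(password) >= 8


def stricter_is_valid_password(password: str) -> bool:
    # Assumed rules for illustration only: ignore surrounding whitespace
    # when measuring length, and require at least one letter and one digit.
    if len(password.strip()) < 8:
        return False
    has_letter = any(ch.isalpha() for ch in password)
    has_digit = any(ch.isdigit() for ch in password)
    return has_letter and has_digit


# The happy-path test that the naive version passes.
assert naive_is_valid_password("correct horse 1")

# The case nobody wrote a test for: eight spaces and nothing else.
blank = " " * 8
print(naive_is_valid_password(blank))     # True
print(stricter_is_valid_password(blank))  # False
```

The point is not the specific rules. The first function only fails once someone writes a test for input the prompt never mentioned.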

This isn’t rare. A 2024 ACM Digital Library study found that 37.2% of AI-generated cryptographic functions were broken. Another study from the IEEE Symposium on Security and Privacy showed 40.2% of AI-generated authentication code had critical flaws. And here’s the kicker: junior developers using Copilot produced code with 14.3% more vulnerabilities than senior devs coding manually.

Why? Because AI doesn’t understand security. It doesn’t understand state. It doesn’t understand edge cases. It sees "password validation" and generates something that looks like other password validation code it’s seen. But real security isn’t about patterns. It’s about intent. And AI doesn’t have intent.

So now you’re not just writing code. You’re debugging AI-generated code. And that’s slower. Experts like Dr. Dawn Song at UC Berkeley call this the "semantic correctness gap": code that passes tests but fails in real use. That gap adds time. A 2025 arXiv survey of 127 papers found that while AI boosts speed by 35-55% on routine tasks, it cuts productivity by 15-20% on complex ones because of the debugging overhead.
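Those two ranges pull in opposite directions, so the net effect depends on your mix of work. The sketch below is back-of-the-envelope arithmetic, not something taken from the survey. It assumes both percentages are changes in throughput (work done per hour) and that your tasks split cleanly into "routine" and "complex". The function names are made up for this example.

```python
def relative_time(routine_share: float, routine_speedup: float,
                  complex_slowdown: float) -> float:
    """Time spent with AI divided by time spent without it.

    routine_share: fraction of baseline time spent on routine tasks (0 to 1).
    routine_speedup: throughput gain on routine tasks, e.g. 0.35 for 35%.
    complex_slowdown: throughput loss on complex tasks, e.g. 0.20 for 20%.
    """
    routine_time = routine_share / (1 + routine_speedup)
    complex_time = (1 - routine_share) / (1 - complex_slowdown)
    return routine_time + complex_time


def break_even_share(routine_speedup: float, complex_slowdown: float) -> float:
    """Routine share at which the tool neither saves nor costs time."""
    routine_factor = 1 / (1 + routine_speedup)
    complex_factor = 1 / (1 - complex_slowdown)
    return (complex_factor - 1) / (complex_factor - routine_factor)


# Pessimistic and optimistic ends of the ranges quoted above.
print(round(break_even_share(0.35, 0.20), 2))
print(round(break_even_share(0.55, 0.15), 2))
```

Under these assumptions, the break-even point lands somewhere between roughly a third and a half of your time being routine work. Below that share, the debugging overhead on complex tasks eats the gains.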

Who’s winning: Copilot, CodeWhisperer, or open-source?

Not all AI code tools are equal. Here’s how the big three stack up on the HumanEval benchmark (a standard test for code correctness):

| Model | Pass@1 Accuracy | Cost | Best For |
|---|---|---|---|
| GitHub Copilot | 52.9% | $10/month (individual) | General development, IDE integration |
| Amazon CodeWhisperer | 47.6% | Free (with AWS account) | AWS service integration |
| CodeLlama-70B | 53.2% | Free (open-source) | Customization, on-prem deployment |
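If the Pass@1 column is unfamiliar: it is the share of benchmark problems a model solves with a single generated sample. As a rough sketch, the unbiased pass@k estimator introduced alongside HumanEval can be written as below. The sample counts in the usage line are invented for illustration and are not taken from any of the tools in the table.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the chance that at least one of k samples passes.

    n: samples generated for a problem, c: samples that passed its tests.
    """
    if n - c < k:
        # Fewer than k failing samples exist, so any draw of k includes a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical run: 10 samples for one problem, 5 of them pass.
print(pass_at_k(n=10, c=5, k=1))  # 0.5
```

For k = 1 this reduces to c / n. A benchmark score is the average of that value across all problems. It says nothing about whether the failing samples were obviously wrong or subtly wrong, and that difference is the part that costs you review time.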

GitHub Copilot leads in adoption: 63% of professional developers use it, per Stack Overflow’s 2024 survey. It works everywhere: VS Code, JetBrains, even Vim. But it’s closed-source. You don’t know what it’s learned, and you can’t tweak it.

CodeWhisperer is cheaper if you’re already in AWS. It’s good at generating code that uses AWS SDKs, but it’s less accurate on general programming tasks. CodeLlama is the only open-source option that comes close in performance. If you need to run it on your own servers, avoid vendor lock-in, or train it on your internal code, it’s the only real choice.

But here’s the twist: accuracy isn’t everything. A 2024 MIT study found that developers using Copilot were 55% faster at finishing tasks but spent 32% more time reviewing code. The tool that saves the most time isn’t always the one that saves the most effort.

Where AI code tools fail, every time

There are three kinds of problems AI code generators consistently get wrong:

- Concurrency: Race conditions, deadlocks, thread safety. AI has no intuition for timing. It generates code that looks parallel but isn’t safe.

- State management: Complex UIs with multiple interacting components. AI doesn’t understand how state flows or when to re-render.

- Security-critical logic: Authentication, encryption, input sanitization. AI treats security like a pattern, not a rule.

These aren’t edge cases. They’re the foundation of real software. If you’re building a banking app, a healthcare system, or even a login page with password reset, you can’t trust AI to write that code.

Worse, AI tools often give you false confidence. You see clean, well-formatted code and assume it’s correct. You don’t test it deeply. You merge it. And then you get paged at 3 a.m. because your auth system lets anyone log in as admin.
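The concurrency item is the easiest of the three to show in a few lines. Below is a minimal sketch, with hypothetical class names, of code that "looks parallel but isn’t safe" next to a version guarded by a lock. Whether the unsafe version actually loses updates on a given run depends on thread scheduling and the Python version, so no output is shown for it.

```python
import threading


class UnsafeCounter:
    def __init__(self) -> None:
        self.value = 0

    def increment(self) -> None:
        current = self.value      # read
        self.value = current + 1  # write; another thread may have written in between


class SafeCounter:
    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # The lock makes the read-modify-write a single critical section.
        with self._lock:
            self.value += 1


def hammer(counter, threads: int = 8, per_thread: int = 10_000) -> int:
    """Call counter.increment() from several threads and return the final value."""
    def work() -> None:
        for _ in range(per_thread):
            counter.increment()

    workers = [threading.Thread(target=work) for _ in range(threads)]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return counter.value


print(hammer(SafeCounter()))    # 80000
print(hammer(UnsafeCounter()))  # at most 80000; may be lower when updates are lost
```

Both classes pass a single-threaded unit test. Only the second one is correct under load, and nothing about the formatting of the first one hints at the problem.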

How to use AI code tools without getting burned

Here’s how real teams are using these tools safely:

- Use AI for boilerplate, not logic: Generate the CRUD endpoints, the API wrappers, the test scaffolding. Write the business rules yourself.

- Never merge AI code without review: Treat every line like it came from a new intern. Run linters, static analyzers, and security scanners. Use tools like Snyk or CodeQL to scan generated code.

- Train your team on prompt engineering: "Write a function" gives you garbage. "Write a Python function that takes a list of user IDs, fetches their profiles from /api/users/[id], filters out inactive users, and returns a JSON array with name and email" gives you something usable. Be specific.

- Enable execution feedback: Newer tools like Copilot Workspace let you run generated code in a sandbox and feed the results back to the AI. This cuts errors by nearly 30%.

- Don’t let juniors use AI unsupervised: MIT found junior devs using Copilot made more mistakes. Use AI to upskill them-but don’t let it replace mentorship.
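For reference, here is roughly what the specific prompt in the list above is asking for. This is a sketch under assumptions: the host in `BASE_URL` is a placeholder, the `active` field name is a guess at how the API marks inactive users, and the `fetch` parameter is added so the function can be tested without a network.

```python
import json
from typing import Callable, Iterable
from urllib.request import urlopen

BASE_URL = "https://example.com"  # placeholder host, not from the article


def http_fetch(user_id: int) -> dict:
    # Fetch one profile from /api/users/<id> and decode the JSON body.
    with urlopen(f"{BASE_URL}/api/users/{user_id}", timeout=5) as response:
        return json.load(response)


def active_user_contacts(
    user_ids: Iterable[int],
    fetch: Callable[[int], dict] = http_fetch,
) -> str:
    """Return a JSON array of {name, email} for users that are active."""
    contacts = []
    for user_id in user_ids:
        profile = fetch(user_id)
        # Assumption: inactive users carry "active": false (or omit the field).
        if not profile.get("active", False):
            continue
        contacts.append({"name": profile["name"], "email": profile["email"]})
    return json.dumps(contacts)


# Usage with a fake fetcher, so no network call is made.
fake_db = {
    1: {"name": "Ada", "email": "ada@example.com", "active": True},
    2: {"name": "Bob", "email": "bob@example.com", "active": False},
}
print(active_user_contacts([1, 2], fetch=fake_db.__getitem__))
```

Even with a detailed prompt, the decisions that matter are the ones the prompt left out: what counts as inactive, what happens on a 404, and whether a missing email should raise or be skipped. Those are the lines to read most carefully in review.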

The goal isn’t to replace developers. It’s to make them faster at the things they’re already good at-and protect them from the things they’re not.

What’s next? The future of AI-assisted coding

GitHub’s 2025 roadmap includes native integration with Jira and Figma. Imagine describing a feature in a ticket, and the AI generates the code, the test, and even the UI mockup. That’s coming.

Google’s Gemini Code Assist now understands Google Cloud services deeply. Amazon’s new CodeWhisperer Security Edition scans for vulnerabilities in real time. These aren’t just features-they’re responses to the biggest criticism: trust.

But the real shift is cultural. Companies are starting to require documentation for AI-generated code. The EU’s AI Act, which entered into force in August 2024 with obligations phasing in over the following years, pushes for transparency in high-risk systems. If your app uses AI to generate authentication code, you may have to disclose it.

And the market is exploding. The AI code generation market hit $2.1 billion in 2024. Gartner predicts 80% of enterprise IDEs will have AI assistants built in by 2026. This isn’t a trend. It’s infrastructure now.

Final thought: AI is your co-pilot, not your pilot

AI code tools aren’t here to replace you. They’re here to make you better, if you use them right. The best developers aren’t the ones who write the most code. They’re the ones who write the least code that works. AI helps you get there.

But if you stop thinking, stop reviewing, stop questioning, then you’re not a developer anymore. You’re a clicker. And that’s the real risk.

Can AI-generated code be trusted for production use?

Only if you treat it like untrusted code. AI tools generate code based on patterns, not understanding. They often produce code that passes tests but fails in edge cases or introduces security flaws. Always review, test, and scan AI-generated code with static analysis and security tools before deploying.
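As a small illustration of what "scan it" can mean, the sketch below uses Python’s standard `ast` module to flag a few risky built-in calls in a snippet before it reaches review. The list of names is an assumption chosen for the example and is nowhere near exhaustive. It is a toy gate, not a substitute for a real scanner such as Snyk or CodeQL.

```python
import ast

# Illustrative only: a handful of built-ins worth a second look in generated code.
RISKY_CALLS = {"eval", "exec", "compile", "__import__"}


def find_risky_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, function name) for each direct call to a risky built-in."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if (
            isinstance(node, ast.Call)
            and isinstance(node.func, ast.Name)
            and node.func.id in RISKY_CALLS
        ):
            findings.append((node.lineno, node.func.id))
    return sorted(findings)


generated = "x = eval(input())\nprint(x)\n"
print(find_risky_calls(generated))  # [(1, 'eval')]
```

A check like this only catches direct calls by name. Aliased or indirect calls slip through, which is one reason the dedicated tools exist.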

Is GitHub Copilot worth the $10/month?

For most developers, yes, if you write a lot of boilerplate code. Copilot reduces context switching and speeds up routine tasks by up to 55%. But if you’re working on complex logic, security-critical systems, or embedded software, the cost may not be worth the risk. Try the free trial first. Use it for 2 weeks on real tasks and measure your time savings versus debugging time.

Do open-source models like CodeLlama perform as well as paid tools?

On benchmarks like HumanEval, CodeLlama-70B matches or slightly beats GitHub Copilot. But performance isn’t everything. Copilot integrates deeply with IDEs, has better documentation, and offers enterprise support. CodeLlama is free and customizable, but you’ll need to manage hosting, updates, and support yourself. Choose open-source if you control your infrastructure. Choose Copilot if you want plug-and-play reliability.

Can AI code tools replace junior developers?

No, and that’s a good thing. AI can generate code faster, but it can’t learn, adapt, or understand business context. Junior developers bring curiosity, problem-solving, and the ability to ask questions. AI doesn’t. The best teams use AI to offload repetitive work so juniors can focus on learning architecture, testing, and debugging.

What programming languages do AI code tools support best?

AI tools are strongest in popular, well-documented languages like Python, JavaScript, TypeScript, Java, and C#. They’re weaker in domain-specific languages (DSLs), embedded systems code (C for microcontrollers), and legacy languages like COBOL. Web developers see the biggest gains because those languages have the most training data. If you’re working in niche or low-code environments, don’t expect much help.

Are there legal risks to using AI-generated code?

Yes. GitHub Copilot is facing lawsuits over potential copyright infringement from training on public GitHub code. Some companies now require developers to avoid using AI tools for proprietary code. Always check your employer’s policy. If you’re releasing open-source software, be aware that AI-generated code might have unclear licensing. When in doubt, rewrite AI output in your own words.

Jane San Miguel

January 30, 2026 AT 07:56

And yes, the 55% speedup is real, for trivial tasks. But when you’re building a payment gateway, that speed becomes a liability. The cost isn’t in the subscription fee. It’s in the technical debt you don’t see until your production system is on fire at 3 a.m.