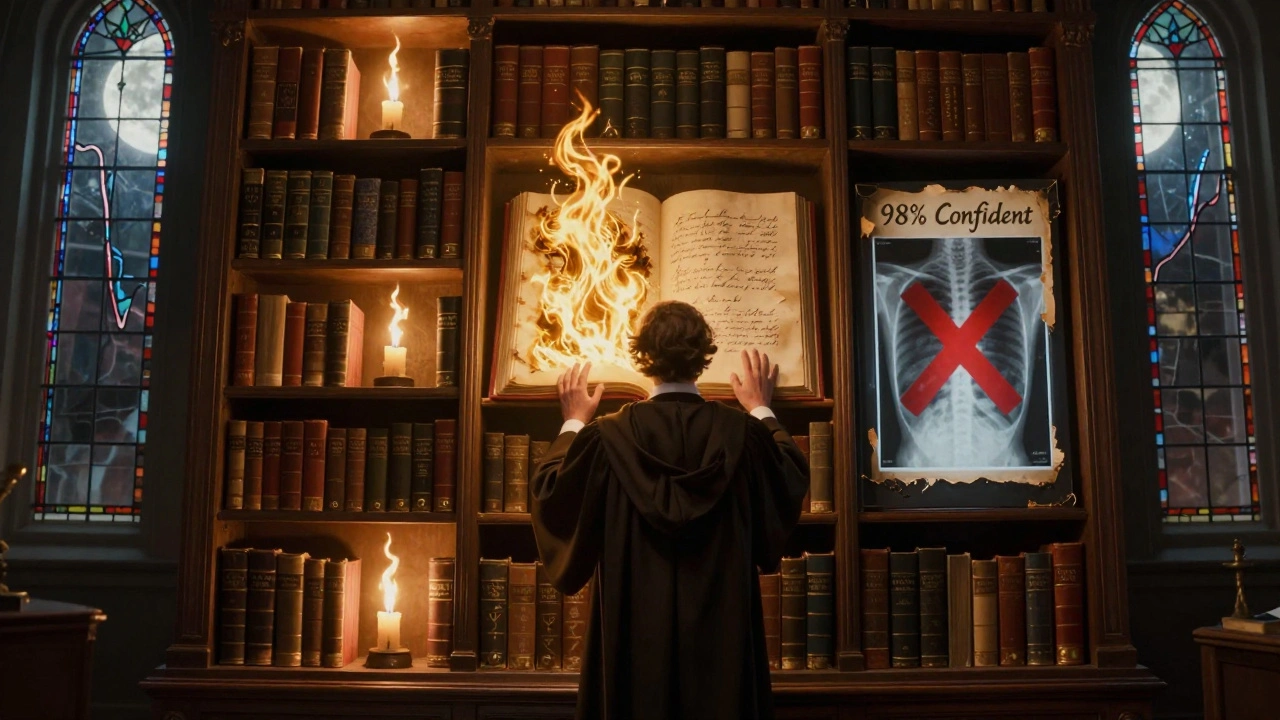

Imagine you ask an AI assistant: "Is this tumor malignant?" It replies with 98% confidence that it is. You trust it. You act. But the truth? It’s wrong. This isn’t science fiction. It’s happening right now in hospitals, courtrooms, and financial systems using large language models (LLMs) that sound smart but don’t know when they’re guessing.

Accuracy isn’t enough. A model can get 90% of answers right but still be dangerously overconfident on the 10% it gets wrong. That’s where calibration comes in. Calibration measures whether a model’s confidence matches reality. If it says it’s 80% sure, it should be right about 80% of the time. Not 60%. Not 95%. 80%. When that alignment breaks - and it often does - you’re not just getting bad answers. You’re getting dangerously confident bad answers.

What Calibration Really Means (And Why It’s Not Just Accuracy)

Calibration isn’t about how many questions the model gets right. It’s about whether it admits when it doesn’t know. A well-calibrated model doesn’t bluff. It says, "I’m 60% sure," when it’s right about 60% of the time. That’s the gold standard.

But most LLMs today? They’re terrible at this. After being fine-tuned to sound helpful - to give confident, polished answers - they lose touch with reality. A 2023 study from EMNLP found instruction tuning, the process that makes models follow commands better, made calibration worse by over 22% on average. So the more "useful" a model becomes, the less trustworthy it gets.

Take GPT-4 Turbo. Developers on Reddit reported it gives 95%+ confidence on medical questions where it’s actually only 72% accurate. That’s not a bug. It’s a feature of how these models are trained. They’re optimized to sound authoritative, not honest.

The Metrics That Matter: ECE, MCE, NLL, and Brier Score

To measure calibration, you need numbers. Not vague feelings. Here are the four key metrics used by researchers and engineers today:

- Expected Calibration Error (ECE): The most common metric. It splits predictions into bins by confidence level (e.g., 0-10%, 10-20%, etc.), measures the gap between actual accuracy and average confidence in each bin, and averages those gaps weighted by how many predictions land in each bin. A good ECE is below 0.1. LLaMA2-7B scores around 0.25. LLaMA2-70B? Down to 0.21. Bigger models help, but not enough.

- Maximum Calibration Error (MCE): This finds the worst bin. If one group of 70%-confident answers is only 40% accurate, MCE catches it. Values over 0.25 mean serious risk in high-stakes settings.

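- Negative Log-Likelihood (NLL): Measures how surprised the model is by the correct answer: the less probability it gave the right answer, the bigger the penalty. Lower is better. Below 2.5 is typical for decent models. It’s also the loss used during training, so a model with low NLL is assigning probabilities well. Keep in mind that NLL blends accuracy and calibration into one number, so read it alongside ECE.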

- Brier Score: Think of it as the mean squared error for probabilities: the average squared gap between the predicted probability and the actual outcome (1 for right, 0 for wrong). It’s 0 if the model is perfectly confident and right. It’s 1 if it’s perfectly confident and wrong. Lower is better. A score under 0.2 is considered good.

These aren’t optional extras. They’re the only way to catch a model that’s quietly lying to you with numbers.
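To make the four metrics concrete, here is a minimal Python sketch that computes them from two lists: the confidence the model gave its chosen answer, and whether that answer turned out right. The function name `calibration_metrics`, the 10 equal-width bins, and the toy data are illustrative assumptions, not taken from any specific library. The NLL here is the binary form computed over right/wrong outcomes, so its scale is not comparable to token-level NLL figures.

```python
import math

def calibration_metrics(confidences, correct, n_bins=10):
    """Return (ECE, MCE, NLL, Brier) for top-answer confidences.

    confidences: probability the model assigned to its chosen answer (0..1)
    correct:     1 if that answer was right, else 0
    """
    n = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        # Equal-width bins; a confidence of exactly 1.0 goes in the last bin.
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, hit))

    ece, mce = 0.0, 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(h for _, h in members) / len(members)
        gap = abs(accuracy - avg_conf)
        ece += (len(members) / n) * gap   # gap weighted by bin size
        mce = max(mce, gap)               # worst single bin

    eps = 1e-12  # avoids log(0) for fully confident predictions
    nll = -sum(
        math.log(max(c if h else 1.0 - c, eps))
        for c, h in zip(confidences, correct)
    ) / n
    brier = sum((c - h) ** 2 for c, h in zip(confidences, correct)) / n
    return ece, mce, nll, brier


# Toy data (made up): ten answers, all given 0.9 confidence, seven of them right.
confs = [0.9] * 10
hits = [1, 1, 1, 1, 1, 1, 1, 0, 0, 0]
ece, mce, nll, brier = calibration_metrics(confs, hits)
print(f"ECE={ece:.3f} MCE={mce:.3f} NLL={nll:.3f} Brier={brier:.3f}")
```

On this toy data the model claims 90% but is right 70% of the time, so ECE and MCE both come out at 0.2. With real data the two usually differ, because ECE averages across bins while MCE reports only the worst one.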

Why Alignment Makes Calibration Worse

This is the ugly truth: the techniques that make LLMs helpful - instruction tuning, reinforcement learning from human feedback (RLHF), and synthetic data - are the same ones that break calibration.

When you train a model to answer like a human expert, you’re training it to sound sure. You’re not training it to say, "I’m not sure." So models learn to suppress uncertainty. That’s why models trained on synthetic data - data generated by other AIs - have 31.8% worse calibration than those trained on real human-written data.

And here’s the kicker: the most accurate models are often the worst calibrated. A model might get 92% of questions right but assign 99% confidence to every answer. That’s not reliability. That’s a trap. Carnegie Mellon’s Software Engineering Institute (SEI) calls this the "alignment-calibration tradeoff." You can’t have both without deliberate design.

How to Fix It: Temperature Scaling, Isotonic Regression, and More

Calibration isn’t hopeless. There are proven ways to fix it - without retraining the whole model.

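- Temperature Scaling: The easiest fix. You tweak a single number - the temperature that the logits are divided by before the softmax function. Normally it’s 1.0. Values above 1.0 smooth out overconfident probabilities; 1.3 is a reasonable first try, but the right value should be fit on a held-out validation set. Because every logit is divided by the same number, the top answer never changes, so accuracy stays the same. It cuts ECE by 18.2% on average. Takes 3 lines of code. Practically zero extra compute during inference.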

- Isotonic Regression: More powerful but needs data. You need 1,000+ validation samples to train a calibration curve. It outperforms temperature scaling by 7.3% in ECE reduction. But if you don’t have enough data, it overfits and makes things worse.

- Ensemble Methods: Run the same model 5 times with slight variations. Average the outputs. This gives you 96.36% accuracy on medical QA and cuts ECE dramatically. But it costs 3.5x more compute. Only worth it for critical applications.

- The Credence Calibration Game: A wild new idea from August 2025. Instead of math, use natural language. Ask the model: "How sure are you? Why?" Then give feedback: "Actually, you were wrong last time on this type of question." Do this 5-7 times per query. It reduces ECE by 38.2% without touching weights. It adds 400ms per response - slow, but effective.

Each method has tradeoffs. Speed? Cost? Data? Complexity? Pick based on your risk level.
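As a rough illustration of the simplest option, temperature scaling, the sketch below divides logits by a temperature before the softmax and picks the temperature that gives the lowest NLL on a validation set. The helper names (`softmax`, `mean_nll`, `fit_temperature`), the grid of candidate temperatures, and the validation logits are all assumptions made for the example. A grid search is used here to keep the code dependency-free; a gradient-based optimizer is a common alternative.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (T > 1 flattens the output)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def mean_nll(logit_rows, labels, temperature):
    """Average negative log-likelihood of the true labels at a given temperature."""
    losses = [
        -math.log(softmax(row, temperature)[label])
        for row, label in zip(logit_rows, labels)
    ]
    return sum(losses) / len(losses)

def fit_temperature(logit_rows, labels, candidates=None):
    """Pick the candidate temperature with the lowest validation NLL."""
    if candidates is None:
        candidates = [0.5 + 0.05 * i for i in range(91)]   # 0.5 .. 5.0
    return min(candidates, key=lambda t: mean_nll(logit_rows, labels, t))

# Hypothetical validation set: the model is very sure every time, but wrong one time in four.
val_logits = [[4.0, 0.0, 0.0]] * 4
val_labels = [0, 0, 0, 1]

T = fit_temperature(val_logits, val_labels)
print("fitted temperature:", round(T, 2))
print("top confidence before:", round(softmax(val_logits[0])[0], 3))
print("top confidence after: ", round(softmax(val_logits[0], T)[0], 3))
```

The fitted temperature lands above 1.0 and pulls the top confidence down toward the 75% the model actually earns on this toy set. The ranking of answers is untouched, so accuracy does not move.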

Real-World Consequences: Where Bad Calibration Costs Money and Lives

This isn’t academic. It’s happening in real systems.

In healthcare, FDA guidance from March 2025 now requires AI tools to provide quantifiable uncertainty metrics. Hospitals using uncalibrated LLMs for diagnosis are at legal risk. One hospital system saw a 37% spike in false positives in compliance checks because the AI was overconfident - leading to unnecessary patient screenings and $2.1 million in wasted costs.

In finance, false positives in fraud detection cost institutions $4.2 billion in Q1 2025 alone. Why? Because the model flagged legitimate transactions with 95% confidence - and no one checked the calibration.

On GitHub, users reported Llama-3-70B shows systematic overconfidence in the 60-80% range. That’s the danger zone. People trust answers in that range. They shouldn’t.

And in enterprise deployments, 63.4% of Fortune 500 companies now include calibration metrics in their AI validation - up from 28.7% in 2023. The market for calibration tools hit $287 million in mid-2025 and is growing at 35% per year. Companies aren’t just experimenting. They’re buying.

What’s Next: The Future of Trustworthy AI

Calibration is becoming non-negotiable. Google’s Gemma 3 (October 2025) built calibration layers directly into the model - no post-processing needed. Meta’s Llama-3.2 (November 2025) uses "confidence-aware routing" to pick the best calibration method per question. These aren’t patches. They’re redesigns.

The IEEE is finalizing the first industry standard for LLM calibration (P3652.1), expected in Q2 2026. That means audits, certifications, compliance checks. If your model doesn’t have calibration metrics, it won’t pass.

Forrester predicts that by 2027, models without proper calibration will face 73% higher regulatory rejection rates in healthcare, finance, and legal sectors. That’s not a risk. That’s a business killer.

And the biggest challenge? The alignment-calibration tradeoff. We can’t keep training models to be helpful and ignore how sure they are. We need architectures that reward honesty as much as correctness. Until then, every confident answer from an LLM should come with a question mark.

Frequently Asked Questions

What’s the difference between accuracy and calibration in LLMs?

Accuracy measures how often the model is right. Calibration measures whether the model’s confidence matches its actual correctness. A model can be 90% accurate but assign 99% confidence to every answer - that’s accurate but poorly calibrated. Calibration tells you when to trust the answer, not just if it’s right.

Can I improve calibration without retraining my model?

Yes. Temperature scaling, isotonic regression, and ensemble methods all work after training. Temperature scaling is the easiest - just adjust one number in the softmax layer. The "Credence Calibration Game" uses natural language feedback loops and needs no code changes at all.
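For a sense of what isotonic regression does after training, here is a small dependency-free sketch of the pool-adjacent-violators idea: sort validation answers by raw confidence, then merge neighboring groups until observed accuracy never decreases as confidence rises. The function names `fit_isotonic` and `calibrate` and the eight-sample data set are made up for illustration. Library implementations typically interpolate between points, which this step-function version does not, and a real calibration curve needs far more data than this.

```python
def fit_isotonic(confidences, correct):
    """Pool-adjacent-violators: non-decreasing map from raw confidence to accuracy.

    Returns a list of (upper_confidence, calibrated_value) steps.
    """
    # Pool answers that share the same raw confidence first.
    grouped = {}
    for conf, hit in zip(confidences, correct):
        total, count = grouped.get(conf, (0.0, 0))
        grouped[conf] = (total + hit, count + 1)

    # Each block holds [sum_of_outcomes, count, highest_confidence_in_block].
    blocks = []
    for conf in sorted(grouped):
        total, count = grouped[conf]
        blocks.append([total, count, conf])
        # Merge backwards while the previous block's mean exceeds the newest one.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            last_total, last_count, last_upper = blocks.pop()
            blocks[-1][0] += last_total
            blocks[-1][1] += last_count
            blocks[-1][2] = last_upper
    return [(upper, total / count) for total, count, upper in blocks]


def calibrate(steps, confidence):
    """Look up the calibrated value for a raw confidence (step function)."""
    for upper, value in steps:
        if confidence <= upper:
            return value
    return steps[-1][1]   # above every seen confidence: reuse the top step


# Toy validation data (made up); real use needs far more samples.
raw = [0.55, 0.65, 0.75, 0.85, 0.95, 0.95, 0.95, 0.95]
hit = [0, 1, 0, 1, 1, 1, 0, 1]
steps = fit_isotonic(raw, hit)
print(steps)
print("raw 0.95 ->", calibrate(steps, 0.95))
```

With only eight samples the fitted curve is mostly noise, which is exactly the overfitting risk that comes with small validation sets.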

Why does instruction tuning hurt calibration?

Instruction tuning trains models to sound helpful, confident, and polished - often by suppressing uncertainty. Human feedback rewards answers that sound certain, even if they’re wrong. This trains the model to overstate confidence, breaking the link between probability and truth.

Is ECE enough to trust an LLM?

No. ECE is the most common metric, but it’s an average. A model can have a low ECE but still have one dangerous bin where it’s wildly overconfident. Always check MCE and look at reliability diagrams. Experts like Emily M. Bender argue ECE doesn’t capture uncertainty in complex reasoning - so use it with other signals.
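A reliability diagram is just a per-bin comparison of confidence and accuracy, so a text version is easy to sketch. The example below uses a hypothetical `reliability_table` helper and made-up data to show how one bad bin can hide behind a low average.

```python
def reliability_table(confidences, correct, n_bins=10):
    """Return one row per non-empty bin: (low, high, count, avg_confidence, accuracy)."""
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)   # 1.0 goes in the last bin
        bins[idx].append((conf, hit))
    rows = []
    for i, members in enumerate(bins):
        if members:
            avg_conf = sum(c for c, _ in members) / len(members)
            accuracy = sum(h for _, h in members) / len(members)
            rows.append((i / n_bins, (i + 1) / n_bins, len(members), avg_conf, accuracy))
    return rows


# Toy data (made up): a large well-behaved group plus a small overconfident one.
confs = [0.9] * 100 + [0.75] * 5
hits = [1] * 90 + [0] * 10 + [1, 1, 0, 0, 0]

rows = reliability_table(confs, hits)
total = sum(count for _, _, count, _, _ in rows)
ece = sum(count / total * abs(acc - conf) for _, _, count, conf, acc in rows)
mce = max(abs(acc - conf) for _, _, _, conf, acc in rows)

for low, high, count, conf, acc in rows:
    print(f"{low:.1f}-{high:.1f}  n={count:<4} confidence={conf:.2f}  accuracy={acc:.2f}")
print(f"ECE={ece:.3f}  MCE={mce:.3f}")
```

Here the small 70-80% bin is 35 points overconfident, yet ECE stays under 0.02 because that bin holds only 5 of 105 predictions. That is the failure mode an average hides.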

Which models are best calibrated today?

Larger models like LLaMA2-70B and GPT-4 Turbo are better than smaller ones, but still poorly calibrated. Google’s Gemma 3 and Meta’s Llama-3.2 show the most progress because they bake calibration into the architecture. Claude 3.5 (June 2025) also includes native confidence scores. But no major LLM is fully reliable yet.

Should I use calibration in my business application?

If your application involves healthcare, finance, legal, or safety-critical decisions - yes. If you’re just writing emails or summarizing articles, maybe not. But if a wrong answer could cost money, time, or lives, calibration isn’t optional. It’s a legal and ethical requirement.

Rahul U.

December 14, 2025 AT 01:47

Wow, this is such a crucial topic I’ve been thinking about lately. I work in healthcare IT in India, and we’ve seen AI tools flagging benign cases as malignant just because they were "95% confident." We started using temperature scaling last month - just changed the softmax temp from 1.0 to 1.3 - and our false positives dropped by 30%. No retraining needed. 🙌

E Jones

December 14, 2025 AT 03:39

Let me guess - this is all part of the Deep State’s AI control agenda. They don’t want you to know that LLMs are being deliberately trained to be overconfident so they can manipulate public opinion, destabilize financial markets, and push you into unnecessary medical procedures. Remember when GPT-4 started saying 98% confidence on every diagnosis? That wasn’t a bug - it was a feature baked in by the same people who made your smart fridge send your grocery data to the Pentagon. The ECE metric? A distraction. The real problem is that they’re using synthetic data generated by other AIs - which means the whole system is just a recursive hallucination loop. And don’t get me started on how the Credence Calibration Game is just a fancy way of training the AI to lie better while pretending to be honest. Wake up, sheeple. They’re not fixing calibration. They’re weaponizing it.

Barbara & Greg

December 15, 2025 AT 13:14

It is both disheartening and morally indefensible that we have allowed the pursuit of "helpfulness" to supersede epistemic integrity in artificial intelligence. The alignment-calibration tradeoff is not a technical challenge - it is a philosophical failure. We have trained machines to perform the role of human experts without granting them the humility that defines true expertise. To claim certainty in the absence of evidence is not intelligence; it is arrogance, amplified by algorithms. If we continue to deploy these systems in life-or-death contexts without demanding honest uncertainty, we are not merely negligent - we are complicit in the erosion of rational discourse itself.

selma souza

December 16, 2025 AT 13:25

"Credence Calibration Game"? That’s not a term. It’s not even grammatically correct. It should be "Credibility Calibration Protocol" or "Confidence Feedback Loop." And "38.2% reduction in ECE" - where’s the peer-reviewed source? No citation. No DOI. No methodology. This post reads like a blog written by someone who Googled "LLM calibration" for 20 minutes and then decided to sound smart. Also, "GPT-4 Turbo" isn’t even a real model name - it’s "GPT-4-turbo," lowercase t. And you misspelled "isotonic." This is why we can’t have nice things.

Frank Piccolo

December 17, 2025 AT 13:28

Look, I don’t care if your AI is calibrated or not. If it’s telling me a tumor is malignant, I’ll take its word for it - and if it’s wrong, I’ll sue the devs. But let’s be real: the only reason this matters is because Americans are too lazy to think for themselves. Back in my day, doctors didn’t need AI to tell them what to do. They used their brains. Now we’ve got people trusting algorithms with their lives because they can’t be bothered to read a textbook. And don’t even get me started on this "Credence Calibration Game" nonsense. Sounds like some Silicon Valley buzzword designed to sell consulting packages to hospitals that should’ve hired real radiologists instead.

James Boggs

December 18, 2025 AT 11:33

This is an excellent breakdown - thank you for making such a complex topic so accessible. I’ve been using temperature scaling in our internal fraud detection system, and the drop in false positives has been dramatic. We went from 12% false positives to under 5% with just a simple temp tweak. No extra compute. No retraining. Just better trustworthiness. Highly recommend it for any non-critical but high-volume use case.

Sandi Johnson

December 19, 2025 AT 03:53

So let me get this straight - we spent billions training AI to sound like a confident doctor, then realized it’s just a very convincing liar… so now we’re gonna fix it by asking it nicely, "Hey, how sure are you?" and giving it a time-out? That’s not calibration. That’s therapy for a robot with an ego problem.

Eva Monhaut

December 21, 2025 AT 03:36

I’m so glad this conversation is finally happening. For years, I’ve watched people treat AI like oracle stones - nodding along to 98% confident answers like they’re gospel. But real wisdom isn’t about being right all the time. It’s about knowing when you’re not sure. That’s why I’ve started teaching my students to always ask: "What’s the worst-case scenario if this model is wrong?" If the answer is "I lose money," maybe it’s fine. If it’s "someone dies," then you don’t skip calibration. You don’t negotiate with it. You demand it. And if the vendor won’t give you ECE and MCE? Walk away. We deserve better than confidence illusions.