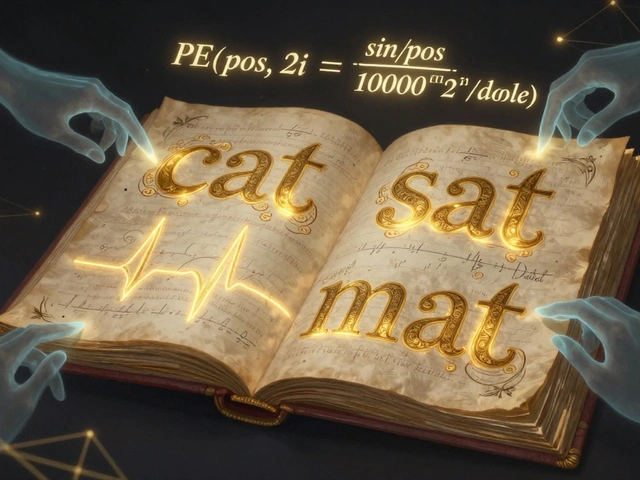

Model Parallelism and Pipeline Parallelism in Large Generative AI Training

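Pipeline parallelism makes it possible to train generative AI models that are too large for a single GPU's memory. It splits the model's layers into sequential stages, places each stage on a different GPU, and streams micro-batches through the stages so the GPUs can work concurrently. Learn how it works, why it's essential, and how it compares to other parallelization methods such as data parallelism and tensor parallelism.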
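To make the idea of splitting a model across GPUs concrete, here is a minimal, framework-free Python sketch. It assumes a model is just an ordered list of layers. It assigns contiguous layers to stages, which stand in for GPUs, and simulates a GPipe-style forward schedule in which a batch is cut into micro-batches so that different stages can work at the same time. All function names are hypothetical. Real training frameworks also schedule backward passes and move activations between devices, and this sketch leaves both out.

```python
from typing import Callable, List, Sequence

Layer = Callable[[float], float]


def partition_layers(num_layers: int, num_stages: int) -> List[range]:
    """Assign contiguous layer indices to stages (one stage per GPU).

    When the split is uneven, the earlier stages get one extra layer.
    """
    base, extra = divmod(num_layers, num_stages)
    stages, start = [], 0
    for s in range(num_stages):
        size = base + (1 if s < extra else 0)
        stages.append(range(start, start + size))
        start += size
    return stages


def forward_schedule(num_stages: int, num_microbatches: int) -> List[List[str]]:
    """GPipe-style forward fill: stage s runs micro-batch m at time step s + m.

    Returns grid[time][stage] holding "F<m>" for work or "-" for an idle slot.
    """
    steps = num_stages + num_microbatches - 1
    grid = [["-"] * num_stages for _ in range(steps)]
    for m in range(num_microbatches):
        for s in range(num_stages):
            grid[s + m][s] = f"F{m}"
    return grid


def bubble_fraction(num_stages: int, num_microbatches: int) -> float:
    """Fraction of idle stage-time slots in the forward fill: (p - 1) / (m + p - 1)."""
    p, m = num_stages, num_microbatches
    return (p - 1) / (m + p - 1)


def run_pipeline(layers: Sequence[Layer], stages: List[range],
                 microbatches: Sequence[float]) -> List[float]:
    """Simulate the schedule: each stage applies only the layers it owns."""
    p, m = len(stages), len(microbatches)
    acts = list(microbatches)  # activation currently held for each micro-batch
    for t in range(p + m - 1):
        for s in range(p):
            mb = t - s  # micro-batch that stage s works on at time step t
            if 0 <= mb < m:
                for i in stages[s]:
                    acts[mb] = layers[i](acts[mb])
    return acts


def sequential_forward(layers: Sequence[Layer], x: float) -> float:
    """Reference: the whole model applied on a single device."""
    for layer in layers:
        x = layer(x)
    return x


if __name__ == "__main__":
    # Toy "model": 10 layers, each a simple affine function.
    layers = [lambda x, k=k: 2 * x + k for k in range(10)]
    stages = partition_layers(len(layers), num_stages=4)
    print(stages)  # [range(0, 3), range(3, 6), range(6, 8), range(8, 10)]
    for t, row in enumerate(forward_schedule(4, 4)):
        print(f"t={t}: " + " ".join(f"{c:>3}" for c in row))
    print(bubble_fraction(4, 4))  # 3/7, about 0.43
    mbs = [1.0, 2.0, 3.0, 4.0]
    assert run_pipeline(layers, stages, mbs) == [sequential_forward(layers, x) for x in mbs]
```

A stage never touches another stage's layers. That separation is what lets each GPU hold only a fraction of the parameters while the pipelined result still matches running the whole model on one device. The idle "bubble" at the start and end of the schedule shrinks as more micro-batches are used. With 4 stages, 4 micro-batches leave 3/7 of the slots idle, while 32 micro-batches leave only 3/35.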