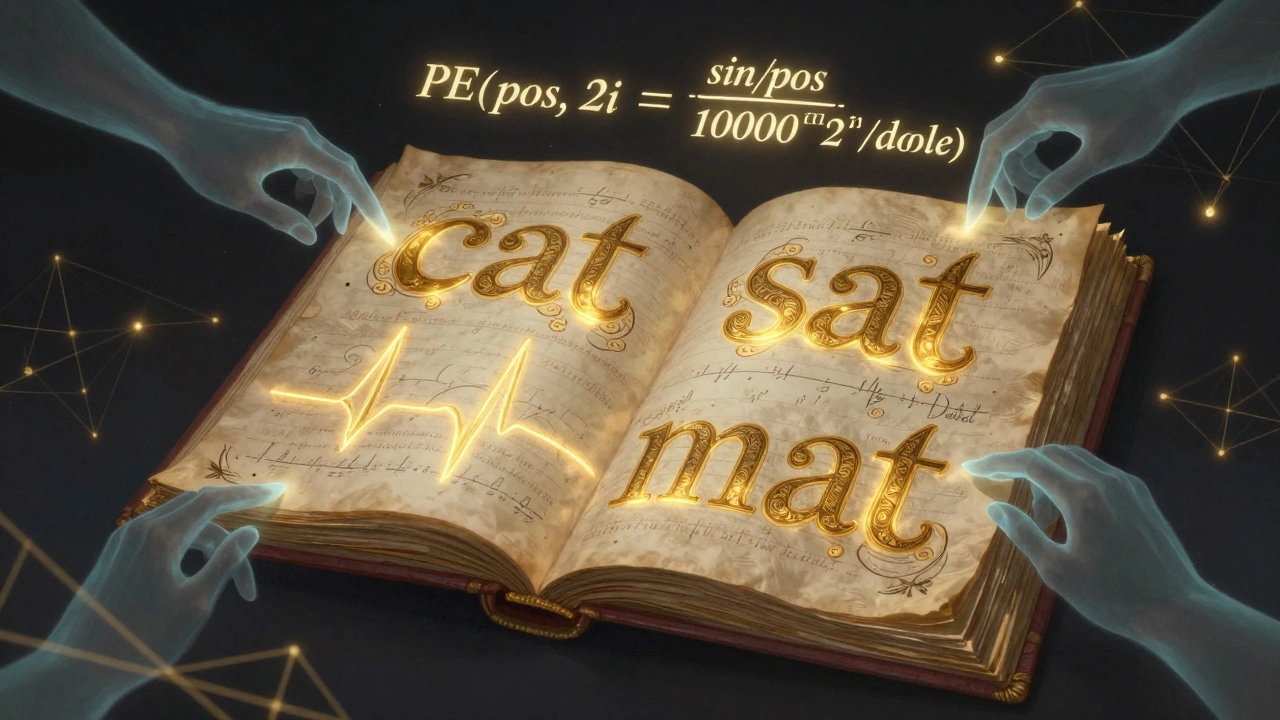

Positional Encoding in Transformers: Sinusoidal vs Learned for Large Language Models

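Sinusoidal and learned absolute positional encodings were the first methods transformers used to represent token order: the original Transformer added fixed sinusoids to its input embeddings, while models such as BERT and GPT-2 learned one embedding vector per position. Both have been largely superseded in modern LLMs by RoPE and, in some models, ALiBi, which encode relative position and handle long contexts far better. Here's what you need to know.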