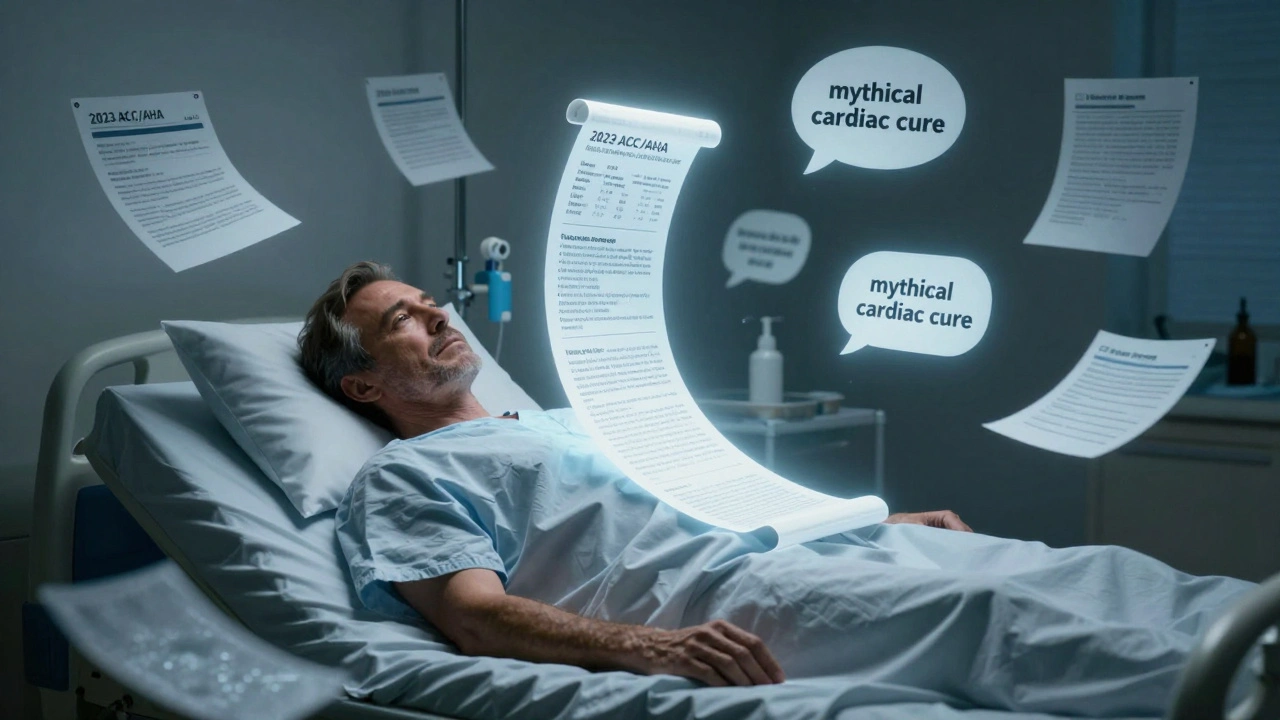

Tag: avoid LLM hallucinations

Prompt Hygiene for Factual Tasks: How to Write Clear LLM Instructions That Don’t Lie

Learn how to write precise LLM instructions that prevent hallucinations, block attacks, and ensure factual accuracy. Prompt hygiene isn’t optional; it’s the foundation of reliable AI in high-stakes fields.